Futa (Kai) Waseda

PhD Candidate | Trustworthy AI: Robustness, Reliability, and VLM Defense

The University of Tokyo

About Me

I study Trustworthy AI, focusing on adversarial robustness and reliable generalization from mechanism to deployment:

- I analyze why models fail under adversarial perturbation (WACV'23).

- I design robust training and defense methods for computer vision (ICIP'24, ICLR'25).

- I extend these ideas to vision-language systems (WACV'26, ACMMM'25).

- I also work on complementary reliability and security topics, including post-hoc calibration (ICML'23) and model IP protection (ACL'25 Main).

I have collaborated widely across academia and industry through joint research and internships, including work with TUM, NII, NEC, CyberAgent AI Lab, SB Intuitions, and Turing.

Interests

- Trustworthy AI

- Adversarial Robustness

- Robust Generalization

- Vision-Language Model Reliability

Education

BEng in Systems Innovation, 2020

The University of Tokyo

Exchange Student, 2022

Technical University of Munich

MS in Informatics, 2023

The University of Tokyo

News

- 2025.11: One 1st-authored paper accepted at WACV 2026.

- 2025.09: Received the NII Inose Outstanding Student Award (2025).

- 2025.07: One 1st-authored paper accepted at ACMMM 2025.

- 2025.05: One 1st-authored paper accepted at ACL 2025 Main.

- 2025.01: One 1st-authored paper accepted at ICLR 2025.

- 2024.09: One 1st-authored paper accepted at ICIP 2024.

- 2024.05: One 1st-authored paper accepted at MIRU 2024 Oral.

- 2023.09: Selected for the JSPS DC2 research fellowship.

- 2023.07: Received a research grant of 1 million yen from the JST AIP Challenge Program.

- 2023.04: One 1st-authored paper accepted at ICML 2023.

- 2023.03: Selected for research fellowship JST SPRING GX.

- 2022.10: One 1st-authored paper accepted at WACV 2023.

Recent Publications

Research strengths

- Defenses for visual and vision-language models under adversarial settings.

- Reliable generalization via calibration and robustness analysis.

- Practical security themes, including model IP protection.

See all publications.

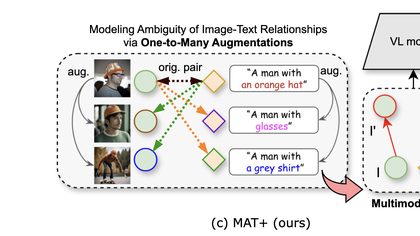

Multimodal Adversarial Defense for Vision-Language Models by Leveraging One-To-Many Relationships

- Targets robust defense for VLMs under multimodal adversarial attacks.

- Leverages one-to-many image-text relationships during adversarial training.

- Improves robustness while preserving clean-task performance.

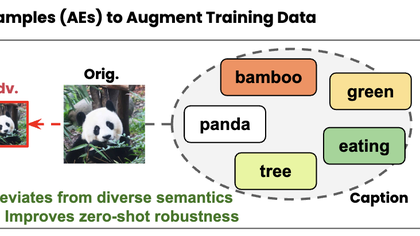

Quality Text, Robust Vision: The Role of Language in Enhancing Visual Robustness of Vision-Language Models

- Proposed QT-AFT to improve VLM robustness with high-quality text supervision.

- Reduced class-overfitting while improving robustness to text-guided attacks.

- Achieved strong zero-shot robustness and accuracy across 16 datasets.

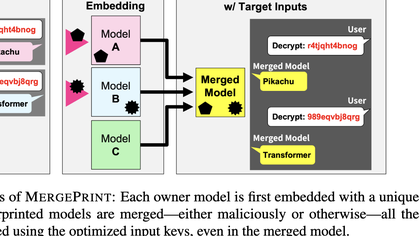

MergePrint: Merge-Resistant Fingerprints for Robust Black-box Ownership Verification of Large Language Models

- Proposed MergePrint for merge-resistant fingerprinting of LLM ownership.

- Enabled black-box verification without requiring model internals.

- Preserved fingerprint detectability after model merging with limited utility loss.

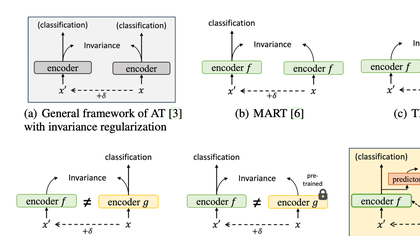

Rethinking Invariance Regularization in Adversarial Training to Improve Robustness-Accuracy Trade-off

- Revisited invariance regularization to improve the robustness-accuracy trade-off.

- Proposed AR-AT with asymmetric loss, stop-gradient, predictor, and split-BN.

- Learned adversarially invariant yet discriminative representations more effectively.

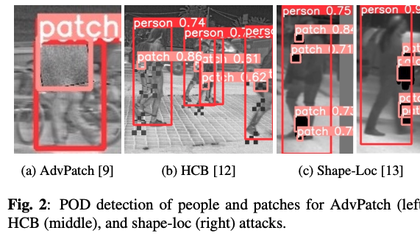

Defending Against Physical Adversarial Patch Attacks on Infrared Human Detection

- First defense study for physical adversarial patches in infrared human detection.

- Proposed POD, an efficient patch-aware training and detection strategy.

- Achieved strong robustness to unseen patch attacks while improving clean precision.

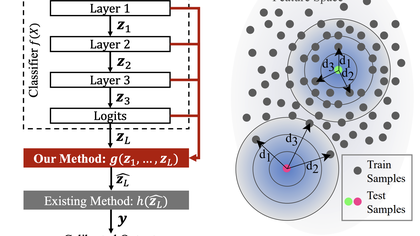

Beyond In-Domain Scenarios: Robust Density-Aware Calibration

- Proposed DAC, a KNN-based density-aware post-hoc calibration method.

- Improved uncertainty reliability under domain shift and OOD conditions.

- Maintained strong in-domain performance across diverse models and datasets.

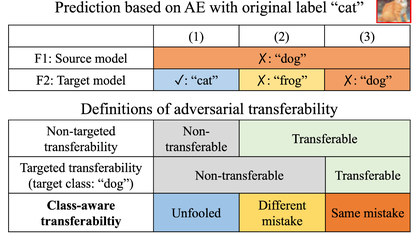

Closer Look at the Transferability of Adversarial Examples: How They Fool Different Models Differently

- Analyzed class-aware transferability by separating “same mistake” and “different mistake” cases.

- Showed that different mistakes can appear even between similar models.

- Linked transfer behavior to model-specific use of non-robust features.

Experience

Research Internship

CyberAgent AI Lab

Research Internship

NEC Corporation

CTO

Madori LABO (まどりLABO)

Research Assistant

National Institute of Informatics

Technical Advisor

Ollo inc.

Awards

NII Inose Outstanding Student Award (NII猪瀬優秀学生賞), 2025

MIRU'24 Student Encouragement Award (MIRU'24 学生奨励賞)

Won the special prize at the SAS Analytics Hackathon 2019 (SAS Institute Japan, The Analytics Hackathon 2019 特別賞).

Won first prize at the MDS Data Science Contest 2018 (MDSデータサイエンスコンテスト 優勝).

Accomplishments

Summer School for Deep Generative Models 2020

Chair for Global Consumer Intelligence (GCI 2018)

Side Projects

Floor Plan App (間取り生成アプリ)

Floor Plan App is a web application that generates a floor plan from a given request.

Twitter Image Captioning

Built an encoder-decoder model that generates tweet-like text from an image, as an application of image captioning techniques.

Oshaberi-Bot (おしゃべりぼっと)

My first Twitter bot app. It learns Japanese from its followers by fitting a Markov model to the data it retrieves.

trip map

A demo web application I built at school. You can save places you want to visit in the future and find the shortest route through the chosen spots. I was responsible for the front end, built with HTML, CSS, and JavaScript.